Ethereum Security Landscape: Threats and Defenses

The Ethereum ecosystem continues to grow rapidly, but with growth comes complexity and risk. Insecure smart contracts, vulnerable infrastructure, and sophisticated attackers can all undermine Ethereum’s security guarantees.

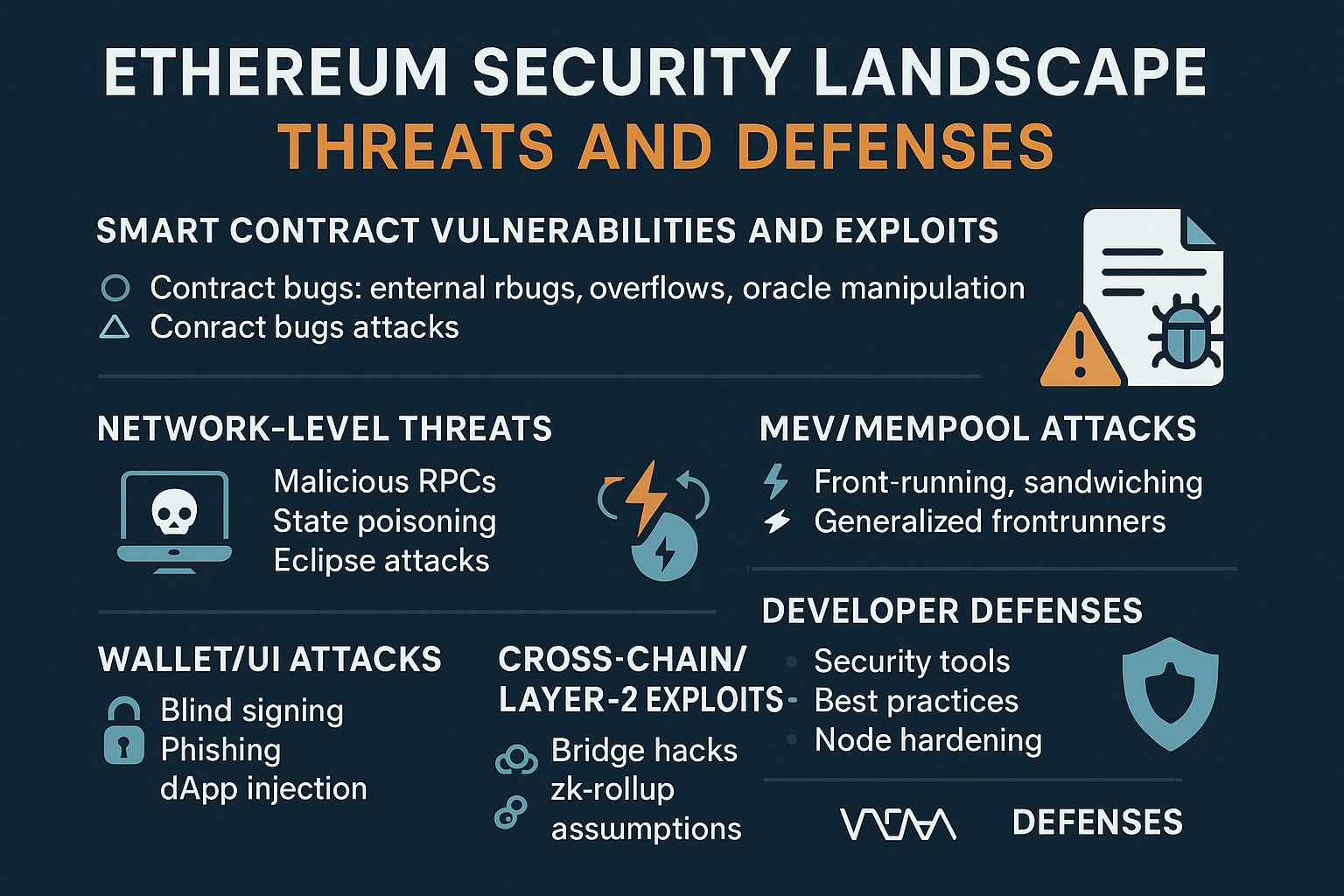

This in-depth guide surveys the wide range of attack vectors facing Ethereum developers and operators today, with case studies of notable exploits and practical mitigation strategies. We cover everything from contract bugs (reentrancy, access control, overflows, oracle manipulation, etc.) to network-level threats (malicious RPCs, state poisoning, eclipse attacks), MEV/mempool attacks (front-running, sandwiching, generalized frontrunners, Flashbots), wallet/UI attacks (blind signing, phishing, dApp injection), consensus/validator risks (centralization, slashing, censorship), cross-chain/Layer-2 exploits (bridge hacks, zk-rollup assumptions), and developer defenses (security tools, best practices, node hardening). Each section provides technical detail, examples, and references to illustrate how real-world incidents occurred and how similar problems can be prevented.

Smart Contract Vulnerabilities and Exploits

Smart contracts are typically immutable once deployed, so bugs in their logic or coding patterns can be devastating. Common smart contract attacks include reentrancy, access control flaws, integer overflow/underflow, and price/oracle manipulation. We examine these with real-world examples.

Reentrancy Attacks

Reentrancy occurs when a contract calls an external untrusted address (such as sending Ether) before updating its own state, allowing the callee to recursively re-enter the vulnerable function and drain funds. The canonical example is The DAO hack (June 2016). The DAO had raised roughly $150 M worth of ETH, but its withdrawal logic sent Ether before subtracting the user’s balance. A malicious actor wrote a “reentrancy” exploit that repeatedly re-entered the vulnerable function via a fallback loop, draining about 3.6 million ETH. In the words of security researchers: “less than 3 months after The DAO’s launch, it was attacked… the hacker drained most of the $150 M worth of ETH from The DAO’s smart contract. The hacker used what has come to be called a ‘reentrancy’ attack”.

A simplified vulnerable contract illustrates the pattern:

    mapping(address => uint) public balances;

    function deposit() external payable {
        balances[msg.sender] += msg.value;
    }

    function withdraw(uint _amount) external {
        require(balances[msg.sender] >= _amount, "Insufficient funds");
        // Send Ether before updating balance (vulnerability)
        (bool success, ) = msg.sender.call{value: _amount}("");
        require(success, "Transfer failed");
        balances[msg.sender] -= _amount;
    }

In this code, the line that sends Ether (msg.sender.call) executes before the balance is decreased. An attacker contract can exploit this by calling withdraw again from its fallback function and extracting more than its balance. The fix is to update state before calling external contracts (the checks-effects-interactions pattern) or to add a reentrancy guard. Solidity’s transfer/send forward only a 2,300-gas stipend, which historically blocked re-entry, but later gas repricing has made that an unreliable defense. For example, moving the balance subtraction above the external call prevents this reentrancy.

Many tools now detect reentrancy risks (for example, Slither ships dedicated reentrancy detectors such as reentrancy-eth), and modern best practice is to always follow checks-effects-interactions or use a guard such as OpenZeppelin’s ReentrancyGuard.
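As a rough illustration, the ordering problem in the withdraw function above can be simulated off-chain. The Python sketch below is a toy model, not EVM semantics: the class names, the `safe` flag, and the amounts are invented for the example, and the modulo arithmetic assumes pre-0.8 Solidity, where subtraction wraps instead of reverting.

```python
UINT256_MOD = 2**256  # assumption: pre-0.8 arithmetic wraps modulo 2**256


class Account:
    """An ordinary depositor that does nothing special on receiving Ether."""

    def __init__(self, address):
        self.address = address

    def receive(self, bank, amount):
        pass


class Attacker(Account):
    """Plays the role of a contract whose fallback re-enters withdraw."""

    def __init__(self):
        super().__init__("attacker")
        self.loot = 0

    def receive(self, bank, amount):
        self.loot += amount
        if bank.pool >= amount:
            try:
                bank.withdraw(self, amount)
            except RuntimeError:
                pass  # re-entry was rejected, stop recursing


class Bank:
    def __init__(self, safe):
        self.safe = safe      # True = checks-effects-interactions ordering
        self.balances = {}
        self.pool = 0         # Ether actually held by the contract

    def deposit(self, who, amount):
        self.balances[who.address] = self.balances.get(who.address, 0) + amount
        self.pool += amount

    def _debit(self, who, amount):
        self.balances[who.address] = (self.balances[who.address] - amount) % UINT256_MOD

    def withdraw(self, who, amount):
        if self.balances.get(who.address, 0) < amount:   # check
            raise RuntimeError("Insufficient funds")
        if self.safe:
            self._debit(who, amount)                     # effect first
        self.pool -= amount
        who.receive(self, amount)                        # interaction
        if not self.safe:
            self._debit(who, amount)                     # effect too late


def run(safe):
    bank, victim, attacker = Bank(safe), Account("victim"), Attacker()
    bank.deposit(victim, 10)
    bank.deposit(attacker, 1)
    bank.withdraw(attacker, 1)
    return attacker.loot
```

Under these assumptions, `run(False)` lets the attacker collect the whole pool from a 1-unit deposit, while `run(True)` returns only the attacker's own deposit because the re-entrant call fails the balance check.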

Access Control Flaws

Contracts often rely on privileged roles (owner, admin, controller) to restrict critical functions. A missing or broken access control check can allow anyone to perform admin actions (changing parameters, minting tokens, or withdrawing funds). For example, the Parity multisig wallet bugs of 2017 were essentially access control/design errors. In July 2017, an unprotected initWallet() function let an attacker take ownership of several multisig wallets and steal roughly 150k ETH. In November 2017, the redeployed library contract was itself left uninitialized, so a user could call initWallet() on it, become its owner, and self-destruct it, freezing ~500k ETH held in wallets that depended on it. If a contract fails to enforce onlyOwner or properly initialize roles, attackers can seize control.

Defenses include using well-audited libraries (OpenZeppelin’s Ownable or AccessControl) and performing thorough access reviews. Automated tools (MythX/Slither) can flag privileged functions that lack an access check, such as an unprotected initializer or a state-changing function missing onlyOwner.

Integer Overflow/Underflow

Before Solidity 0.8, arithmetic overflow/underflow occurred silently (e.g. subtracting more than a variable’s value wrapped around to a huge number). A classic exploit was the BecToken (BEC) bug (2018): its batchTransfer function multiplied the number of recipients by the per-recipient value without an overflow check, so an attacker chose a value that made the product wrap to zero, passing the balance check while crediting each recipient an enormous number of tokens. Today, Solidity 0.8+ checks overflow by default, but older contracts (or code inside unchecked blocks) remain at risk. The OWASP smart contract guide lists integer bugs as a top risk. The fix is to use SafeMath or built-in checks, and ensure external inputs (e.g. uint256 from oracles or users) cannot cause wrap-around.
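The wrap-around described above can be reproduced with a toy batch-transfer function. This Python sketch is a simplified model, not the original contract: the function and variable names are illustrative, and Python integers are masked to 256 bits to imitate unchecked uint256 multiplication.

```python
UINT256_MAX = 2**256 - 1


def mul_unchecked(a: int, b: int) -> int:
    """Multiplication as pre-0.8 Solidity performs it: wraps modulo 2**256."""
    return (a * b) & UINT256_MAX


def mul_checked(a: int, b: int) -> int:
    """Multiplication with an overflow check, as in Solidity 0.8+ or SafeMath."""
    product = a * b
    if product > UINT256_MAX:
        raise OverflowError("uint256 overflow")
    return product


def batch_transfer(balances, sender, receivers, value, mul):
    """Debit the sender once for the total, then credit each receiver."""
    amount = mul(len(receivers), value)
    if balances.get(sender, 0) < amount:
        raise ValueError("insufficient balance")
    balances[sender] = balances.get(sender, 0) - amount
    for receiver in receivers:
        balances[receiver] = balances.get(receiver, 0) + value


# With two receivers and value = 2**255, the unchecked total wraps to zero,
# so a sender with no tokens passes the balance check.
ledger = {"attacker": 0}
batch_transfer(ledger, "attacker", ["a", "b"], 2**255, mul_unchecked)
```

Passing `mul_checked` instead makes the same call raise before any balance changes, which is the behavior checked arithmetic is meant to provide.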

Oracle and Price Manipulation

Many contracts trust external price feeds or use on-chain prices for logic. If an attacker can manipulate these inputs, they can exploit the contract. A notable case is Harvest Finance (October 2020). Harvest had vaults relying on Curve pools’ stablecoin prices. The attacker took out a large flash loan (~$17M USDT) and swapped it for USDC via Curve, pushing the USDC price to $1.01. Then with a separate $50M USDC loan, they entered the Harvest vault (which misvalued that USDC as $50.5M) and then reversed the swap, netting a profit. Repeating this, the attacker extracted $24 M from Harvest. As reported:

“The attack used flash loans to convert $17 million USDT into USDC through Curve, temporarily boosting the USDC price to $1.01. The attacker then used another flash loan of $50 million USDC… and after reversing the previous trade, redeemed their Harvest vault shares to receive $50.5 million in USDC — a net profit of $500,000 per cycle… obtaining $24 million in loot”.

Harvest’s issue was not a code bug but a design flaw: an oracle check that failed to recognize the temporary price shift. This is often called a “liquidity attack” or “flash-loan oracle manipulation.” Other examples: in February 2020 the bZx protocol suffered two flash-loan attacks that manipulated on-chain prices (involving WBTC and sUSD). To defend, protocols should use secured oracles (e.g. Chainlink Price Feeds with multiple data sources, or time-weighted averages), limit sensitivity to single large trades, and remove exploitable arbitrage opportunities. The Harvest team later recognized that audits should have flagged this edge case.
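To see why a time-weighted average blunts this kind of manipulation, consider the sketch below. It is a minimal illustration in Python with made-up numbers: the 30-minute window and the 12-second block are assumptions, and real TWAP oracles use on-chain price accumulators rather than a list of observations.

```python
def twap(observations):
    """Time-weighted average of (price, seconds_in_effect) observations."""
    total_time = sum(seconds for _, seconds in observations)
    if total_time <= 0:
        raise ValueError("observations must cover a positive time span")
    return sum(price * seconds for price, seconds in observations) / total_time


# Hypothetical window: the price sits at 1.00 for 30 minutes, then is pushed
# to 1.01 for a single 12-second block by a large swap.
window = [(1.00, 1800), (1.01, 12)]
spot_price = window[-1][0]      # what a spot-price reader would see
average_price = twap(window)    # what a TWAP reader would see
```

A contract reading `spot_price` sees the full 1% distortion, while `average_price` barely moves. This sketch is generous to the attacker: a flash loan must be repaid within one transaction, so the distorted price would normally not persist for even a full block.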

Other Smart Contract Risks

Other common vulnerabilities include: unchecked sends (not checking the return value of low-level calls), denial-of-service (e.g. loops over dynamic arrays of unbounded length), delegatecall injection, self-destruct misuse, and missing invariants. Each class has case studies (see Cyfrin’s DAO hack explainer or OWASP SCVS). The key is rigorous code review and testing.

Infrastructure-Level Risks

Even if contracts are safe, the network and node infrastructure itself faces threats. Key risks include malicious or compromised RPC nodes, state poisoning and eclipse attacks, node misconfigurations, and denial-of-service on nodes or the mempool.

Malicious or Compromised RPC Nodes

Most Ethereum clients (e.g. Geth, Erigon) expose an HTTP/WebSocket RPC API. Developers often use public RPC endpoints (Infura, Alchemy, QuickNode, etc.) or run their own. A malicious or compromised RPC provider could lie about blockchain state, censor transactions, or deliver stale data. For example, if your DApp trusts an RPC node that has been tampered with, it might feed you a bogus transaction history to trick you. Likewise, if a popular provider (like Infura) has a bug or outage, many users are affected. In Nov 2020, Infura’s outage (due to an old Geth client bug) caused wallets and exchanges to fail pending ETH transactions. As Crypto Briefing notes:

“Infura is one of Ethereum’s principal infrastructure providers… while convenient, it means apps are essentially relying on another party… A bug in Geth, when fixed, caused anyone on an old version to split into a minority fork. Transactions failed, and exchanges halted ETH/ERC-20 transfers.”

This highlighted that centralizing on one provider is dangerous. Developers should run diversified and up-to-date node infrastructure. For critical applications, use multiple RPC providers or run local full nodes. Remote nodes also expose your IP and usage patterns; a malicious node could selectively censor or reorder your transactions.
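One way to act on the multi-provider advice is to accept an answer only when several independent endpoints agree. The Python sketch below assumes each provider is wrapped in a zero-argument callable (a hypothetical interface chosen so the example stays self-contained); in practice each callable would issue a JSON-RPC request such as fetching a block hash.

```python
from collections import Counter
from typing import Callable, Dict, Optional


def quorum_value(fetchers: Dict[str, Callable[[], str]], quorum: int) -> Optional[str]:
    """Return the answer reported by at least `quorum` providers, else None.

    A provider that raises is treated as unavailable and simply skipped.
    """
    answers = Counter()
    for name, fetch in fetchers.items():
        try:
            answers[fetch()] += 1
        except Exception:
            continue  # outage or timeout: ignore this provider
    if not answers:
        return None
    value, votes = answers.most_common(1)[0]
    return value if votes >= quorum else None
```

Returning `None` when no quorum is reached forces the caller to decide explicitly (retry, alert, or refuse to act) instead of silently trusting a single node.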

State Poisoning, Eclipse and Fake Peers

Ethereum’s P2P network can be attacked. Eclipse attacks involve isolating a node by surrounding it with malicious peers. An attacker who controls a node’s P2P connections can feed it a falsified view of the chain (state poisoning). For example, malicious peers might give invalid block timestamps or heads. The “timejacking” attack lets an attacker skew a node’s network time by advertising many peers with manipulated timestamps. This could make the node accept a wrong chain head. Similarly, an attacker who controls most or all of a node’s peer slots can hide honest peers from it. Both scenarios can lead to delayed syncing, missed transactions, or acceptance of malicious blocks.

A survey of node security warns: “malicious attackers change the network time counter… add many fake peers with inaccurate timestamps” (timejacking) or “Eclipse attacks: attacker controls a large number of IPs to intercept or isolate a node’s messages.” Nodes must use up-to-date client versions (which check timestamps and enforce peer rules) and employ firewall/IPS to prevent networking attacks.

Node Misconfiguration and DDoS

A misconfigured node can be a security hole. For example, leaving an Ethereum client’s JSON-RPC port (8545) open to the public without authentication can allow attackers to call admin methods, drain wallets, or crash the node. Blockchain node security guides emphasize: restrict RPC access by IP, disable unsafe APIs (e.g. personal, debug) on public nodes, and require authentication. Overexposed RPC has led to thefts (developers accidentally exposing hot-wallet endpoints).
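The advice to disable unsafe APIs can also be enforced at a proxy in front of the node. The following Python sketch shows one possible allowlist check; the chosen namespaces and blocked methods are assumptions for a read-mostly public endpoint, not a recommended universal policy.

```python
# Assumption: a public endpoint that only needs standard read/broadcast calls.
ALLOWED_NAMESPACES = {"eth", "net", "web3"}
# Methods inside an allowed namespace that rely on keys held by the node.
BLOCKED_METHODS = {"eth_sendTransaction", "eth_sign", "eth_accounts"}


def is_request_allowed(request: dict) -> bool:
    """Decide whether a JSON-RPC request may be forwarded to the node."""
    method = request.get("method")
    if not isinstance(method, str) or "_" not in method:
        return False
    namespace = method.split("_", 1)[0]
    return namespace in ALLOWED_NAMESPACES and method not in BLOCKED_METHODS
```

An allowlist fails closed: namespaces such as personal, admin, or debug are rejected without having to be enumerated, and so is anything added to the client later.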

Distributed Denial-of-Service (DDoS) attacks are also a threat. Attackers can flood the network with cheap transactions (as in the autumn 2016 denial-of-service attacks on Ethereum, which exploited underpriced operations) or request data from nodes endlessly to exhaust CPU/memory. Nodes should run behind load balancers or DDoS protection (e.g. Cloudflare), and limit incoming RPC requests per IP. Monitoring for unusually high traffic is crucial.
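Per-IP request limiting is often implemented with a token bucket. The Python sketch below is one minimal version; the class names and the rate and capacity values are illustrative, and the clock is passed in explicitly so the logic can be exercised without waiting.

```python
class TokenBucket:
    """Allows `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float, now: float):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.updated = now

    def allow(self, now: float) -> bool:
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class PerIpLimiter:
    """Keeps one bucket per client IP address."""

    def __init__(self, rate: float, capacity: float):
        self.rate = rate
        self.capacity = capacity
        self.buckets = {}

    def allow(self, ip: str, now: float) -> bool:
        if ip not in self.buckets:
            self.buckets[ip] = TokenBucket(self.rate, self.capacity, now)
        return self.buckets[ip].allow(now)
```

In a real deployment `now` would come from a monotonic clock, and idle buckets would need to be evicted so the table cannot itself be used to exhaust memory.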

Centralized Providers (Infura/Alchemy): Outages and Censorship

Centralized node services like Infura or Alchemy can be single points of failure or targets. In addition to outages, transaction censorship is a concern: if a provider decided (or was forced) to drop certain transactions (e.g. Tornado Cash-related), transactions submitted through it would never be broadcast. A similar dynamic appeared when some validators began censoring sanctioned Tornado Cash transactions (see Consensus and Validator Threats below). Running your own node removes this trust, but requires more maintenance.

In summary, node hardening best practices include: run up-to-date client versions, restrict RPC interfaces to trusted apps, whitelist peers, use SSH keys and firewalls, and consult the RPC security guidance published for clients such as Teku, Nethermind, and Erigon. As one node security summary puts it: “Keep the software up to date and review the configurations… employ a web application firewall… run a node in a VM or container with strict permissions.”

MEV and Mempool Exploits

Maximal Extractable Value (MEV) refers to profits miners (or validators) and bots can extract by reordering, front-running, or censoring transactions in the public mempool. Ethereum’s open mempool is a “dark forest” where transactions are visible before inclusion, and predatory bots lurk. Common MEV attacks include front-running, back-running, and sandwich attacks, and more advanced “generalized frontrunners”.

Front-running: A bot sees a pending transaction (e.g. a large DEX swap) and submits its own similar transaction with higher gas to go before it.

Sandwich attacks: The classic DeFi exploit. A bot sees Alice’s swap (say swapping 100 ETH for DAI), then places a front-running buy order that pushes the price up, lets Alice’s trade execute at a worse rate, and then back-runs by selling what it bought at the inflated price that Alice’s trade created. The bot pockets the difference. Coinbase’s explainer calls sandwich bots the “degen menace”; users lost ~$37M to such attacks on Uniswap in 2020.

“A sandwich attack works like this: the attacker spots a pending large token purchase, inserts a buy order before it (front-run), letting the victim raise the price, then immediately sells (back-run) at the higher price. The victim gets fewer tokens; the attacker profits”.

Back-running/liquidation bots: These watch for lucrative transactions (e.g. liquidations, arbitrages) and swiftly place transactions to capture profit after the original.

Generalized frontrunners: As described by Paradigm, some bots scan every pending tx for any arbitrage opportunity, rewriting it to profit even from unrelated contracts. The “dark forest” post explains:

“Generalized frontrunners look for any transaction they can profitably front-run by copying it and replacing addresses; they’ll happily copy any profitable transaction, even internal calls, to extract value.”

In other words, any large pending transaction is a target for these automated MEV bots.
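The sandwich mechanics described above can be made concrete with a constant-product pool. The Python sketch below is a simplified model: the pool sizes, the 100 ETH victim trade, and the 50 ETH front-run are invented numbers, and swap fees and gas costs are ignored.

```python
def swap(pool, amount_in, token_in):
    """Swap against a constant-product pool (x * y = k), ignoring fees."""
    token_out = "DAI" if token_in == "ETH" else "ETH"
    k = pool[token_in] * pool[token_out]
    new_in = pool[token_in] + amount_in
    amount_out = pool[token_out] - k / new_in
    pool[token_in] = new_in
    pool[token_out] -= amount_out
    return amount_out


def run(front_run_eth):
    """Return (DAI received by Alice, ETH profit of the bot)."""
    pool = {"ETH": 1_000.0, "DAI": 2_000_000.0}
    # Front-run: the bot buys DAI first, moving the price against Alice.
    bot_dai = swap(pool, front_run_eth, "ETH") if front_run_eth else 0.0
    # Victim trade: Alice swaps 100 ETH for DAI at the worsened price.
    alice_dai = swap(pool, 100.0, "ETH")
    # Back-run: the bot sells its DAI back into the pool Alice just moved.
    bot_eth_back = swap(pool, bot_dai, "DAI") if bot_dai else 0.0
    return alice_dai, bot_eth_back - front_run_eth


fair_out, _ = run(0.0)
sandwiched_out, bot_profit_eth = run(50.0)
```

In this model Alice receives fewer DAI when sandwiched than in the undisturbed case, and the bot ends with more ETH than it started with; real bots size the front-run against the victim's slippage limit and must also cover fees and gas.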

Flashbots and Private Mempools: To mitigate MEV, the community developed Flashbots (a research group with their own auction system). Flashbots allows users to send bundles of transactions directly to miners/validators via a private channel, bypassing the public mempool. This lets users specify ordering and avoid competing bots. Flashbots Auction’s goal is “to preserve Ethereum’s ideals by creating a permissionless, transparent, fair ecosystem for efficient MEV extraction and frontrunning protection”. In practice, a bundle might include your tx plus a tip for the block producer; the producer then includes the bundle in a block without exposing it to the public mempool. Flashbots has also extended to general users: Flashbots Protect is an RPC endpoint that routes your tx privately, preventing opportunistic bots from seeing it.

Example: Users can point their wallet (e.g. MetaMask) at the Flashbots Protect RPC instead of a public RPC endpoint to submit a tx. This sends the tx to a private mempool, hiding it from sandwich bots. Protect also advertises refunds of a share of any MEV your transaction creates for block builders.

Even so, MEV remains a complex issue. Separation of block building (builders) and proposing (validators) with MEV-Boost has changed the landscape, but miners/validators still profit from MEV. The Flashbots docs warn that unchecked MEV poses a “time-bandit” reorg risk: if old blocks have more MEV, a validator might try reorgs to steal it, threatening finality. Overall, Ethereum devs must assume mempool transparency: users of DEXs and lending protocols should consider tools like private transaction relays and always set prudent slippage/limits.
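Setting a prudent slippage limit usually means passing a minimum acceptable output to the swap, so the transaction reverts instead of filling at a manipulated price (Uniswap V2-style routers expose this as an amountOutMin argument). A small Python sketch of the arithmetic, with a hypothetical helper name and tolerance expressed in basis points:

```python
def min_amount_out(quoted_out: int, slippage_bps: int) -> int:
    """Smallest acceptable output, in token base units, for a given tolerance.

    slippage_bps is in basis points (50 = 0.5%). Integer math mirrors how
    on-chain amounts are handled; the division rounds down.
    """
    if not 0 <= slippage_bps <= 10_000:
        raise ValueError("slippage_bps must be between 0 and 10000")
    return quoted_out * (10_000 - slippage_bps) // 10_000
```

For example, `min_amount_out(1_000_000, 50)` gives 995000. A tighter tolerance leaves less room for a sandwich bot but makes the swap more likely to revert during ordinary price movement.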

Wallet and UI-Level Attacks

Even with secure contracts, users and wallets are vulnerable to social and UI attacks. These include blind signing, phishing and spoofed interfaces, and malicious dApp integrations.

Blind Signing and Malicious Signatures

Wallet software often allows signing arbitrary messages (via eth_sign) or meta-transactions. The risk is blind signing: users sign a payload without understanding it, inadvertently approving a malicious transaction. Attackers can craft data that, when blindly signed, authorizes an unintended token transfer. The Token.im blog warns:

“Warning: eth_sign is a blind signing method. The raw signature format is not human-readable; a malicious party can trick you into signing a seemingly innocuous message that actually contains, e.g., a token transfer. The result can be irreversible token loss.”

In practice, this could be a DEX order or approval contract call hidden behind a benign prompt. Developers should use EIP-712 typed signing (which lets the wallet display the structured fields being signed) and educate users to never sign raw hex strings. Wallet UIs must clearly display all fields. On the defense side, wallet providers are building protections: for example, MetaMask’s new “Protect” features and transaction decode aim to warn about dangerous signatures.
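To illustrate what typed signing gives a wallet to display, here is a sketch of an EIP-712 style payload and a helper that lists its fields. The top-level keys (types, primaryType, domain, message) and the domain field names follow EIP-712; the Order type, the placeholder addresses, and the render_for_review helper are hypothetical, and no hashing or signing is performed.

```python
PLACEHOLDER_TOKEN = "0x" + "11" * 20      # illustrative addresses only
PLACEHOLDER_RECIPIENT = "0x" + "22" * 20
PLACEHOLDER_CONTRACT = "0x" + "33" * 20

typed_data = {
    "types": {
        "EIP712Domain": [
            {"name": "name", "type": "string"},
            {"name": "version", "type": "string"},
            {"name": "chainId", "type": "uint256"},
            {"name": "verifyingContract", "type": "address"},
        ],
        # Hypothetical message type, for illustration only.
        "Order": [
            {"name": "sellToken", "type": "address"},
            {"name": "sellAmount", "type": "uint256"},
            {"name": "recipient", "type": "address"},
        ],
    },
    "primaryType": "Order",
    "domain": {
        "name": "Example DEX",
        "version": "1",
        "chainId": 1,
        "verifyingContract": PLACEHOLDER_CONTRACT,
    },
    "message": {
        "sellToken": PLACEHOLDER_TOKEN,
        "sellAmount": 1000000,
        "recipient": PLACEHOLDER_RECIPIENT,
    },
}


def render_for_review(data: dict) -> list:
    """List each field of the primary message as 'name (type): value'."""
    fields = data["types"][data["primaryType"]]
    missing = [f["name"] for f in fields if f["name"] not in data["message"]]
    if missing:
        raise ValueError(f"message is missing fields: {missing}")
    return [f'{f["name"]} ({f["type"]}): {data["message"][f["name"]]}' for f in fields]
```

Because the payload carries its own type description, the wallet can show the user named fields such as the amount and recipient, which is not possible with an opaque hex blob passed to eth_sign.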

Phishing UIs and Fake Wallets

Users can be tricked into using fake wallet software or front-ends. A famous case was a fake MetaMask Android app on Google Play (2019) which posed as an official wallet. In this attack, the app installed “clipboard hijacking” malware: it watched for ETH or BTC addresses in the clipboard and silently replaced them with the attacker’s address. It also stole MetaMask seed phrases. The Coindesk report notes: “The malware… impersonates MetaMask and steals users’ credentials and private keys… It can also intercept wallet addresses copied to the clipboard.” In other words, a user copying an address from a DEX or contract could have it changed mid-paste, sending funds to the thief.

More recently, supply-chain attacks on dApp libraries have caused huge wallet drainers (see next). Users should only download wallets from official sites/stores, double-check URLs, and treat seed phrases/clipboard data as sensitive. Hardware wallets mitigate some phishing but are not immune if the transaction payload is tampered.

Malicious dApp/UI Injection

Web interfaces can be compromised. One illustrative supply-chain hack (Dec 2023) targeted Ledger’s official JavaScript library used by many dApps. Attackers injected malicious code into the @ledgerhq/connect-kit NPM package. Any dApp pulling that library automatically served the payload to users, turning dozens of front-ends into wallet drainers simultaneously. According to Blockaid’s post-mortem:

“The attacker was able to inject a malicious wallet-draining payload into the @ledgerhq/connect-kit NPM package… every dApp using this dependency pulled the new version and served it to users. Among affected dApps were hey.xyz, sushi.com, zapper.xyz, and countless others.”

Hundreds of users lost funds before patches were applied. This shows how dApp-level attacks can scale: a single compromised library or XSS on a front-end can trick many users. Solutions include code audits, real-time monitoring (as Blockaid provides), and defense-in-depth: wallet companies often track known malicious payloads and warn users (e.g. SafePal’s Scam List or MetaMask’s phishing warnings). As MetaMask’s security team emphasizes, users should never “trust dapps” by default: they only trust what MetaMask itself displays. A recent MetaMask blog stresses, “Users never trust dapps… dapps can be malicious or compromised. They can make users believe they are signing a given payload when in fact they are signing something else”. In practice, always verify transaction details on the wallet’s own confirmation screen.

Consensus and Validator Threats

Ethereum’s PoS consensus introduces new risks around block producers (validators). Key issues include staking centralization, slashing and operator error, censorship, and finality delays.

Staking Centralization

If too much stake is controlled by a few entities, the network becomes vulnerable to collusion or coordinated failure. For example, the liquid staking protocol Lido has at times controlled close to a third of all staked ETH through a limited set of node operators. If Lido’s operators colluded or were subpoenaed, they could orchestrate coordinated misbehavior (e.g., censor transactions). Decentralists worry that centralized stake pools undermine Ethereum’s promise of distributed consensus.

Developers should monitor stake distribution (Etherscan or staking dashboards) and encourage use of alternatives (Rocket Pool, StakeWise, P2P staking) to diversify. Clients also need to coordinate: official discouragements of centralization (e.g. not voting for proposals that overly favor one pool) are part of the conversation. There’s no direct exploit yet from stake concentration, but it raises systemic risk.

Slashing and Downtime Risks

PoS validators are slashed (lose ETH) if they sign conflicting votes or blocks. The ConsenSys blog explains Ethereum’s slashing conditions: proposing two different blocks for the same slot, or signing conflicting attestations (a double vote or a surround vote), is penalized. In effect, if a validator’s keys are compromised or two copies run simultaneously, the validator loses part of its stake and is forcibly exited, and the penalty grows toward the full balance when many validators are slashed in the same period. In practice, slashing events remain rare (only ~0.04% of validators have been slashed as of 2024, mostly due to operator error). Still, operators must guard keys carefully (using remote signers, secure enclaves) to avoid accidental slashes. Even an honest large pool can pay a high penalty if a misconfiguration causes many of its validators to misbehave at once.

Downtime is less severe but can lead to minor penalties and lost rewards. If >1/3 of stake goes offline, the network can halt finality (see below). E.g., in May 2023 a lapse in participation caused an “inactivity leak” event. Over 60% of blocks were missed, causing ~1 minute block times and an activation of the inactivity leak mechanism. Offline validators gradually lost balance while the remaining nodes gained relative weight, re-establishing finality. As Etherscan’s TY blog notes, this was ultimately handled without user-visible impact, but it underscores that finality can stall if many clients fail simultaneously.

Censorship and Finality Delays

Validators can choose which transactions to include. Censoring (refusing to include certain transactions) is a hot topic post-sanction. In 2022, the US Treasury sanctioned Tornado Cash addresses. Shortly after, data showed over half of Ethereum blocks were excluding transactions from Tornado Cash addresses. Some validators (and MEV relays like Flashbots) decided to comply with sanctions, effectively blacklisting those TXs. As one commentator warned, if all validators collude, “they would eventually form the canonical, 100% censoring chain”. (In practice, around half the network continued including those TXs, so the chain did not split).

Proof-of-Stake exacerbates censorship risk: if >1/3 of active stake refuses to finalize blocks with censored transactions, finality stops. In theory, 2/3 majority is needed for finality, so if 34% of stake votes against, the chain can freeze (leading to fallback “inactivity leak” or manual governance). To date, Ethereum has not seen a real chain split, but validators and users remain vigilant. Flashbots data confirms that even with 50% compliance among block builders, censored TXs eventually get mined by the rest of the network.
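The two-thirds threshold in the paragraph above reduces to a simple comparison. The Python sketch below uses exact fractions to avoid floating-point edge cases; the function name is illustrative, and it models only the supermajority test, not the rest of the finality gadget.

```python
from fractions import Fraction

SUPERMAJORITY = Fraction(2, 3)


def can_finalize(attesting_stake: int, total_active_stake: int) -> bool:
    """True if the attesting stake reaches the two-thirds supermajority."""
    if total_active_stake <= 0:
        raise ValueError("total_active_stake must be positive")
    return Fraction(attesting_stake, total_active_stake) >= SUPERMAJORITY
```

With 34% of stake withholding votes, only 66% attests and `can_finalize(66, 100)` is false, which is the stall described above; one more percentage point of participation tips it back.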

Apart from sanctions, censorship can be used by colluding validators or builders to extract more MEV (excluding competitors) or by attackers in a 51% scenario to drop certain txs. Tools like MEV-Boost let validators choose which relays they connect to, including non-censoring ones, which mitigates unilateral censorship. But developers should note that finality can be delayed if enough validators go offline or refuse to sign certain blocks. The consensus spec’s inactivity leak mechanism handles this by slowly penalizing the offline group until the online group regains >2/3.

Re-orgs and Finality Risk

Finally, any attacker with a high percentage of stake could attempt a deep reorg. MEV research highlights “time-bandit” attacks: when past blocks contain more extractable value than a validator would earn by building honestly, the validator has an incentive to rewrite recent history and capture that MEV. Though largely theoretical, this risk underscores the importance of long-term security (e.g. waiting for finality before treating high-value transactions as settled) and eventual algorithmic defences (like future VDF delays).

Bridge and Layer-2 Vulnerabilities

Cross-chain bridges and L2 scaling solutions introduce their own security models. We review notable bridge hacks and what they teach us.

Bridge Exploits (Wormhole, Ronin, Poly, etc.)

Bridges connect Ethereum to other chains (Solana, Polygon, Ronin, etc.). Security depends on proper message validation across chains. Several high-profile hacks illustrate the danger:

Wormhole (Feb 2022): The Wormhole bridge connected Ethereum and Solana. The hacker exploited a contract bug on Solana to mint 120,000 wrapped ETH (wETH) without collateral. In dollar terms, roughly $320M was minted and stolen. Chainalysis explains that Wormhole’s contract allowed bypassing the signature check: the attacker “found an exploit in Wormhole’s code that allowed them to mint 120,000 wrapped ETH…without collateral”. Halborn’s analysis adds detail: the exploit involved a deprecated function on Solana that let the attacker masquerade as a signature authority, bypassing verification. The takeaway is that bridges must strictly validate cross-chain signatures and block headers. Any flaw (forgotten validation, unsafe third-party lib) can destroy the peg.

Ronin Bridge (Axie Infinity, March 2022): Attackers (linked to Lazarus Group) obtained the private keys of 5 out of 9 Ronin validators by phishing an Axie developer’s network. With 5 of 9 keys, they forged fake withdrawal transactions and drained 173,600 ETH and 25.5M USDC (~$620M). The technical root was social engineering plus the fact that Ronin required only 5 signatures for a withdrawal, and those keys were poorly segregated. This shows how validator centralization (fewer, less-secure operators) can lead to catastrophic theft. Bridge systems often use a multi-sig or DAO model; here, a single phishing compromise was enough to reach the signing threshold.

Poly Network (July 2023): Poly Network, a multichain hub, had a vulnerability in its cross-chain validation. Halborn reports the attacker used “a malicious parameter including a fake block header and validator signature” to bypass Poly’s checks, effectively minting arbitrary tokens on various chains. In nominal terms, $43 billion of assets were minted (spread across 57 assets and 10 chains), though only ~$10 M could be withdrawn due to liquidity limits. This “verification bypass” pattern recurred in other hacks (e.g. Meter.io, Qubit). Bridges must rigorously validate that messages actually come from the source chain and authorized validators. Embedding fresh block headers and multi-sig signatures in proofs is common, but any flaw in that logic is fatal.

Others: Numerous smaller bridges (Multichain, xETH, etc.) have been drained by similar flaws (insufficient signature checks, trusting untrusted validators, etc.). In each case, auditors noted that “bridges are the top security risk”. The common learning: treat cross-chain messages as untrusted inputs and use hardened verification (e.g. on-chain confirmers, slow bridge waves, or entirely trustless proofs).

Message Replay and Spoofing

Bridges often relay messages via relayers or oracles. A risk is transaction replay or spoofing: an attacker intercepts a message sent to one chain and replays it (or a modified version) on another chain to fool the bridge. Proper nonce or hash checks can mitigate this. For example, a bridge can tie each cross-chain transfer to a unique transaction hash and require finality on the source chain before execution. When designing or using bridges, developers must ensure atomicity and uniqueness of messages to prevent double-spend across chains.
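The nonce and hash checks mentioned above can be sketched as destination-side bookkeeping. In the Python example below, the class and field names are hypothetical, SHA-256 stands in for whatever hash a real bridge uses, and signature or proof verification is deliberately left out.

```python
import hashlib


class BridgeReceiver:
    """Accepts each cross-chain message at most once, and only if addressed here."""

    def __init__(self, chain_id: int):
        self.chain_id = chain_id
        self.processed = set()

    @staticmethod
    def message_id(source_chain: int, dest_chain: int, nonce: int, payload: bytes) -> str:
        # Fixed-width integer encoding keeps the field boundaries unambiguous.
        header = (
            source_chain.to_bytes(8, "big")
            + dest_chain.to_bytes(8, "big")
            + nonce.to_bytes(8, "big")
        )
        return hashlib.sha256(header + payload).hexdigest()

    def execute(self, source_chain: int, dest_chain: int, nonce: int, payload: bytes) -> bool:
        if dest_chain != self.chain_id:
            return False  # message was addressed to a different chain
        mid = self.message_id(source_chain, dest_chain, nonce, payload)
        if mid in self.processed:
            return False  # replay of an already executed message
        self.processed.add(mid)
        return True
```

Binding the destination chain into the identifier is what stops a valid message for one chain from being replayed on another; the processed set stops it from being executed twice on the same chain.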

Optimistic vs ZK Rollup Security

Layer-2 rollups scale Ethereum by moving execution off-chain. There are two main models:

Optimistic Rollups (e.g. Arbitrum, Optimism): Assume L2 transactions are valid. After a batch posts to L1, anyone can challenge with a fraud proof if they detect an incorrect state update. This requires a challenge period (commonly 7 days) during which withdrawals to L1 are not yet final. The risk is that the sequencer/operator publishes an invalid state and no one notices (or challengers are censored), so a bad state is accepted. However, economic incentives (bounties for fraud proofs) generally make it safe. The cost is that withdrawals are delayed.

ZK Rollups (e.g. zkSync, Loopring): Use cryptographic validity proofs (SNARKs or STARKs). Each batch is accompanied by a proof verified on-chain, so state transitions are guaranteed correct as long as the proof system is sound. In principle, this is stronger security: correctness does not depend on anyone watching for fraud, and withdrawals are quick. However, many ZK-rollups currently have a single sequencer node or permissioned committee that orders transactions. If that sequencer censors or goes offline, the rollup can stall (users may still withdraw using the on-chain proof, but new transactions halt). As Chainlink Education notes: “Zk-rollups typically rely on the base layer for data availability, settlement, and censorship resistance… Operators can be a centralized sequencer… or a PoS system where transaction execution is rotated among validators.”

Thus, ZK-rollups inherit Ethereum’s base layer security for data, but add assumptions: that the proving system is sound and that the sequencer stays live and honest. A broken proving system (very unlikely) could let invalid state be accepted, while a colluding or faulty sequencer can censor or stall L2 activity. Rollup designers mitigate this by planning decentralized sequencers or fallback mechanisms.

In summary, rollup security best practices include having on-chain data availability (so even if operators vanish, users can still reconstruct state), thorough review of proof systems, and decentralizing sequencer duties over time. Developers building on rollups should understand each chain’s model: e.g. not trusting the L2 sequencer more than absolutely necessary, and designing contracts that can migrate or withdraw under consensus fallback.
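For the optimistic model, the withdrawal delay discussed above comes down to a time check against the challenge window. A minimal Python sketch, assuming the commonly cited 7-day period and a hypothetical three-state classification:

```python
CHALLENGE_PERIOD_SECONDS = 7 * 24 * 60 * 60  # assumption: a 7-day window


def withdrawal_status(posted_at: int, now: int, invalidated: bool,
                      challenge_period: int = CHALLENGE_PERIOD_SECONDS) -> str:
    """Classify an L2-to-L1 withdrawal by the state of the batch it belongs to."""
    if invalidated:
        return "invalidated"   # a fraud proof against the batch succeeded
    if now - posted_at < challenge_period:
        return "pending"       # still inside the challenge window
    return "finalizable"       # window elapsed with no successful challenge
```

Applications that bridge funds out of an optimistic rollup should treat "pending" withdrawals as unsettled; validity-proof rollups avoid this wait because the proof is checked when the batch is posted.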

Developer Defenses and Best Practices

Given these threats, Ethereum developers must adopt rigorous security practices:

Smart Contract Hygiene: Use battle-tested libraries (OpenZeppelin, SafeMath, ReentrancyGuard), and adopt coding patterns (checks-effects-interactions, use of modifiers for access control). Never assume functions will behave as expected; code defensively. Audits by third-party firms can catch subtle flaws – many high-profile projects (e.g. Uniswap, Compound) were audited before mainnet launch.

Testing and Analysis: Automated tools are invaluable. Slither (static analyzer) can flag issues like reentrancy or uninitialized storage. MythX and Mythril perform symbolic analysis for vulnerabilities. Echidna (by Trail of Bits) is a fuzz tester: you write “properties” (e.g. balances should never exceed deposits) and Echidna tries random transactions to break them. Hardhat/Foundry frameworks allow writing unit and integration tests. For example, using Foundry’s forge to fuzz test transfers can catch overflow bugs or logic lapses. An invariant test might check that after any transfer or deposit, totalSupply == sum(balances) always holds.

No tool catches everything. A comparative study found that traditional analyzers often missed complex bugs, and even ChatGPT (surprisingly) outperformed some analyzers on simple contracts. The takeaway: use multiple approaches. Incorporate static analysis, fuzzing, and formal verification as needed. For each new contract, run Slither, run MythX scans, write unit tests (covering edge cases), and consider hiring an external audit for production code. Continuous integration (CI) pipelines should fail on any flagged vulnerability.

Local vs Remote RPC Strategy: Whenever possible, run a local full node (Geth, Erigon, Besu) instead of trusting a third-party API. This prevents remote RPC censorship and gives you full data control. If a remote provider is used (for convenience or scale), code should handle failures (e.g. retrying on error) and use multiple endpoints (Infura + Alchemy + Pocket, etc.) to avoid dependence on one. The Infura outage example shows why fallback is critical.

Node Hardening: Secure your own Ethereum nodes. Advice includes: disable unsafe RPC modules (personal, admin, debug), use firewall rules (e.g. allow RPC only from your backend), use SSH keys and strong passwords, and keep software updated. The recommended practice is to serve RPC over TLS with client certs if public exposure is needed. Tools like ufw or iptables can enforce port restrictions. CompareNodes suggests whitelisting IPs and disabling dangerous methods for production RPCs.

Monitoring and Simulation: Platforms like Tenderly allow simulation of transactions against a fork of mainnet state, helping predict failures and debug state changes. Monitoring services that watch for reorgs, oracle deviations, and mempool anomalies (such as a large pending sandwich) complement this. Setting up alerts for unusual on-chain activity near your contracts can catch exploits early.

Key Management: For multisig and validator keys, use hardware security modules or hardware wallets. Never embed private keys in code. Use multi-sigs with quorum and timelocks for large treasury moves. Follow the principle of least privilege: a contract should only be able to mint/burn tokens when absolutely needed.

Upgrades and Patches: If your contract is upgradeable (via a proxy), audit every upgrade. Even if the contract is immutable, include an emergency withdrawal or pause function (controlled by a multi-sig) to halt the contract if a vulnerability is found post-launch.

Community Intelligence: Stay informed. Follow security news (Rekt.news, CertiK alerts, Immunefi disclosures). Learn from incidents: when hacks occur, read the post-mortems. Many projects share what went wrong in their code (e.g. Harvest Finance’s blog after their exploit). Knowledge of how attacks succeed is the first step to defense.
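The property-based testing idea from the Testing and Analysis item above (random calls that try to break totalSupply == sum(balances)) can be sketched without any blockchain tooling. The Python example below is a toy stand-in for what Echidna or Foundry do against real bytecode; the Token classes, user names, and step count are all illustrative.

```python
import random


class Token:
    def __init__(self):
        self.total_supply = 0
        self.balances = {}

    def mint(self, to, amount):
        self.balances[to] = self.balances.get(to, 0) + amount
        self.total_supply += amount

    def transfer(self, sender, to, amount):
        if self.balances.get(sender, 0) < amount:
            raise ValueError("insufficient balance")
        self.balances[sender] -= amount
        self.balances[to] = self.balances.get(to, 0) + amount


class BuggyToken(Token):
    def transfer(self, sender, to, amount):
        # Deliberate bug: credits the receiver without debiting the sender.
        self.balances[to] = self.balances.get(to, 0) + amount


def fuzz_invariant(token_cls, steps=1_000, seed=0):
    """Apply random mints and transfers; report whether the invariant held."""
    rng = random.Random(seed)
    token = token_cls()
    users = ["alice", "bob", "carol"]
    for _ in range(steps):
        try:
            if rng.random() < 0.3:
                token.mint(rng.choice(users), rng.randrange(0, 100))
            else:
                token.transfer(rng.choice(users), rng.choice(users), rng.randrange(0, 100))
        except ValueError:
            pass  # a rejected call is fine; the invariant must still hold
        if token.total_supply != sum(token.balances.values()):
            return False
    return True
```

Running `fuzz_invariant(Token)` and `fuzz_invariant(BuggyToken)` shows the pattern: the correct token survives every random sequence, while the buggy one violates the invariant as soon as a non-zero transfer is tried.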

Developer Toolchain Example

For concreteness, a modern defense setup might be:

IDE/Editor: VSCode with Solidity plugins (solhint, solidity-coverage).

Testing: Foundry or Hardhat for unit tests in Solidity/JS, with Echidna fuzzing on critical functions.

Static Analysis: Slither run locally and in CI; integrate MythX as a GitHub check.

Audits: After development, have a professional audit. Incorporate audit tools’ automated suggestions (MythX, Certora, etc.).

Simulation: Before any mainnet tx, run it on Tenderly or forked Hardhat node.

RPC: Use Infura or Alchemy endpoints only for development; for production, even for read-only traffic, consider a load-balanced cluster of your own nodes.

The Ethereum ecosystem also provides official guidance (e.g. the Ethereum Security Guild’s best practices), and numerous checklists exist (Consensys Diligence checklist, OpenZeppelin secure contracts guide).

Conclusion

Ethereum’s open and composable nature invites both innovation and attack. This guide has surveyed the major known risks: contract bugs (reentrancy, overflows, oracle design flaws, etc.), infrastructure attacks (RPC, eclipse, DDoS), MEV manipulation, wallet-level phishing and supply-chain attacks, consensus threats (centralization, slashing, censorship), and the pitfalls of bridges and rollups. Each topic is vast, and this guide has only touched on key points and examples drawn from case studies and research.

In practice, security in Ethereum is an ongoing process: code defensively, monitor continually, and assume attackers are always probing. By learning from each high-profile exploit (DAO, Wormhole, Poly, Ronin, Harvest, etc.) and leveraging the growing toolbox of analysis and simulation (Slither, Echidna, MythX, Flashbots, Tenderly), developers can make contracts and infrastructure far more robust. Good practices, from careful code review to running diversified nodes and using private transaction relays, combine into a multi-layered defense. As the old crypto maxim goes, “don’t trust, verify”: always validate inputs, audits, and on-chain behavior.